Abstract

This paper explores the integration of AI-powered tools into the design and implementation of rubrics for assessing English for Academic Purposes (EAP) courses. By using carefully constructed AI prompts, educators can streamline rubric creation, align rubrics with course objectives, and thereby support both teaching and learning outcomes. The paper discusses the benefits and challenges of rubric use and provides step-by-step guidance on using generative AI to develop both holistic and analytic rubrics. The case studies highlight the value of AI-assisted rubric design, combined with careful review by educators, in promoting fairness, reliability, and efficiency in assessment practices.

Keywords: AI-powered rubric design, English for Academic Purposes (EAP), holistic rubrics, analytic rubrics.

Introduction

Rubrics, sets of criteria used to evaluate whether and how well a performance meets expectations, are essential tools in educational assessment. They provide a structured framework for grading by outlining specific criteria, quality levels, and a scoring strategy (Reddy & Andrade, 2010), thereby helping to enhance transparency and fairness in the evaluation process.

The use of rubrics offers several advantages for both instructors and students. For instructors, rubrics promote consistency in assessing student performance by providing a standardized framework that applies uniform criteria across all evaluations (Chen et al., 2013). This consistency helps mitigate bias and subjectivity in grading, leading to more equitable assessments. Rubrics also provide an objective basis for evaluation, allowing instructors to justify their grading decisions with specific, transparent criteria (Campbell, 2005). Such transparency not only supports fairness but also fosters trust between instructors and students. Furthermore, rubrics facilitate alignment between course objectives, instruction, and assessment by explicitly connecting learning goals with evaluation criteria (Jonsson, 2014). This alignment helps instructors design assessments that accurately measure the intended learning outcomes. Additionally, when the same rubric is used repeatedly for similar assignments, it becomes a valuable tool for tracking student improvement over time, allowing instructors to identify patterns in student performance and adjust their instructional strategies accordingly (Allen & Tanner, 2006).

For students, rubrics reduce complaints and increase satisfaction by clarifying expectations and providing a detailed outline of what is required at each level of performance (Barney et al., 2012). By setting out specific criteria, rubrics reduce ambiguity and help students understand how their work will be evaluated, which helps prevent misunderstandings and disputes about grades. Rubrics also enhance dialogue between instructors and students by serving as a common reference point for discussing assignments and feedback (Menéndez-Varela & Gregori-Giralt, 2018). This improved communication fosters a more collaborative learning environment in which students can better understand their strengths and areas for improvement. Furthermore, rubrics contribute to improved student performance by encouraging self-regulation and reflection. When students receive detailed feedback based on rubric criteria, they are better equipped to assess their own work, set learning goals, and make targeted improvements (Panadero & Romero, 2014). This process of self-assessment and goal-setting fosters the development of higher-order thinking skills, ultimately contributing to enhanced academic performance.

Despite their advantages, rubrics can be time-consuming and challenging to develop. One of the main difficulties lies in articulating expectations precisely across different levels of quality: each criterion must be described in a way that is both understandable and actionable. Educators must balance detail with clarity so that students can interpret the rubric's criteria accurately, while avoiding overly complex or ambiguous language. Achieving the right level of specificity and comprehensibility often requires iterative revision and consultation.

Additionally, as Felder and Brent (2016) suggest, rubric development requires careful attention to four key indicators: validity, reliability, fairness, and efficiency. Validity ensures that the rubric accurately measures the intended learning outcomes and aligns with the educational objectives of the course. Reliability concerns the consistency of the rubric, that is, whether it produces similar results across different evaluators and assessment occasions. Fairness involves creating a rubric that is equitable, transparent, and unbiased, with clear criteria and understandable language, so that all students have an equal chance to succeed based on their performance. Efficiency refers to the ease and speed with which the rubric can be administered, scored, and interpreted, allowing educators to evaluate student work quickly and accurately without sacrificing detail. Achieving an optimal balance among these indicators requires thoughtful design and ongoing evaluation to refine the rubric and enhance its effectiveness in supporting educational goals.

With advancements in artificial intelligence (AI), there is now the potential to streamline and improve the rubric creation process. This paper investigates the application of AI prompts in generating rubrics for EAP courses. By automating certain parts of the rubric design process, educators can focus on fine-tuning and adapting rubrics to meet the specific needs of their students, thereby enhancing the quality of both assessment and the overall learning experience.

Demonstration: Case Studies

The following section outlines how AI prompts can be used to create both holistic and analytic rubrics, drawing on the framework proposed by Nolen (2024) and adapted specifically for EAP courses. The generative AI tool used in this process is the free version of ChatGPT. To demonstrate the practical application of AI-powered rubric design, two case studies from EAP courses are presented.

Case Study 1: Holistic Rubric for In-class Test (ICT) (EAP047)

Holistic rubrics assess student work as a unified whole, resulting in a single score. This type of rubric is useful when a general judgment of performance is sufficient, such as in large-scale assessments or when time constraints are a concern.

For the EAP047 ICT, a holistic rubric was generated using the following AI prompts, with the four key indicators considered in each part of the prompt. The process involved three main steps.

Step 1: Define the Task and Learning Objectives

- AI Prompt:

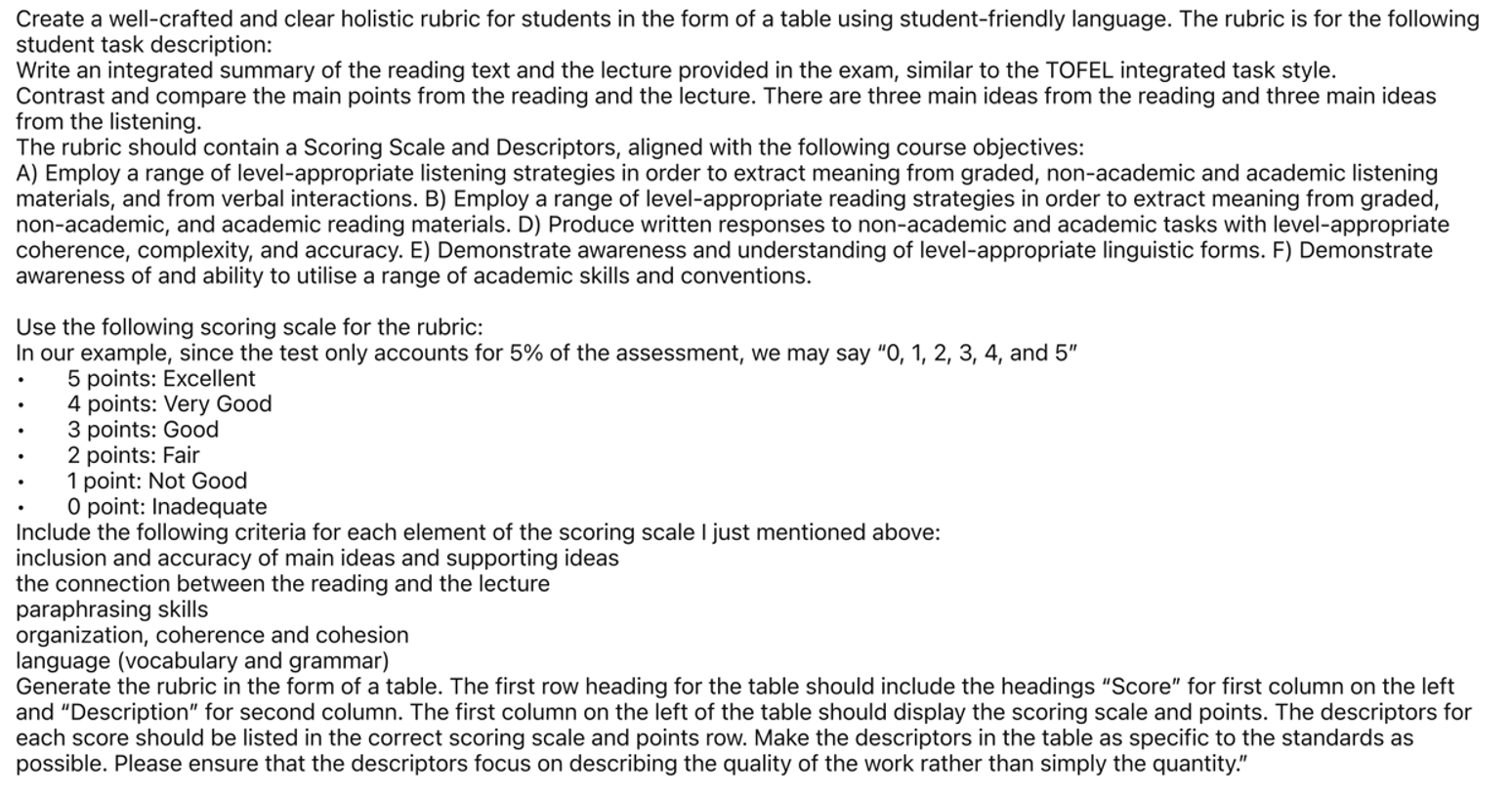

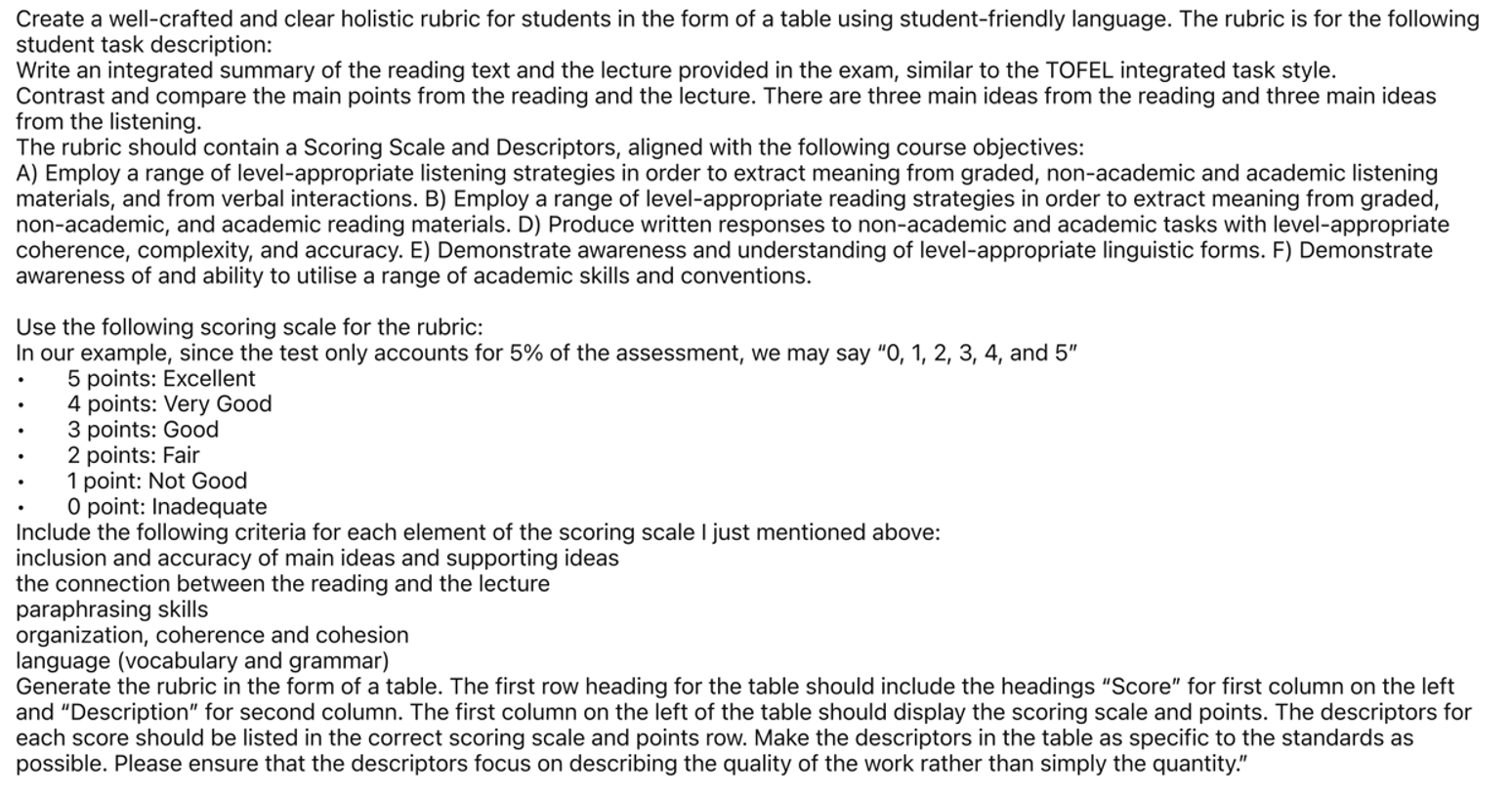

"Create a well-crafted and clear holistic rubric for students in the form of a table using student-friendly language. The rubric is for the following student task description: [Paste in the task description]."

In our case, the task is: “Write an integrated summary of the reading text and the lecture provided in the exam, similar to the TOEFL integrated task style. Contrast and compare the main points from the reading and the lecture. There are three main ideas from the reading and three main ideas from the listening.”

The language and terminology used in rubrics should align with course objectives, so we include the course learning objectives in our prompt:

- AI Prompt:

"The rubric should contain a Scoring Scale and Descriptors, aligned with the following course objectives: [Paste in the course learning objectives]."

In our case, we used the following objectives:

"A) Employ a range of level-appropriate listening strategies to extract meaning from graded, non-academic, and academic listening materials, and from verbal interactions.

B) Employ a range of level-appropriate reading strategies to extract meaning from graded, non-academic, and academic reading materials.

D) Produce written responses to non-academic and academic tasks with level-appropriate coherence, complexity, and accuracy.

E) Demonstrate awareness and understanding of level-appropriate linguistic forms.

F) Demonstrate awareness of and ability to utilize a range of academic skills and conventions."

Step 2: Generate the Scoring Scale and Criteria

Next, we specify the scoring scale and the criteria that the descriptors should address:

- AI Prompt:

"Use the following scoring scale for the rubric: [Paste in the scoring scale]. For each scoring scale, generate a descriptor based on the course objectives I have provided. Please ensure that the descriptors focus on describing the quality of the work rather than simply the quantity. For example, descriptors should highlight xx, xx, xx, xx and xxx."

In our case, we can say

“0, 1, 2, 3, 4, and 5

- 5 points: Excellent

- 4 points: Very Good

- 3 points: Good

- 2 points: Fair

- 1 point: Not Good

- 0 points: Inadequate

Include the following criteria for each element of the scoring scale mentioned above: inclusion and accuracy of main ideas and supporting ideas, the connection between the reading and the lecture, paraphrasing skills, organization, coherence and cohesion, and language (vocabulary and grammar)."

Step 3: Ask the AI to Generate the Rubric in the Desired Format

Finally, we ask the AI to generate the rubric in table form:

- AI Prompt:

"Generate the rubric in the form of a table. The first row heading should include 'Score' and 'Description'. The first column should display the scoring scale and points. The descriptors for each score should be listed in the corresponding row. Ensure that the descriptors focus on describing the quality of the work rather than simply the quantity (reliability)."

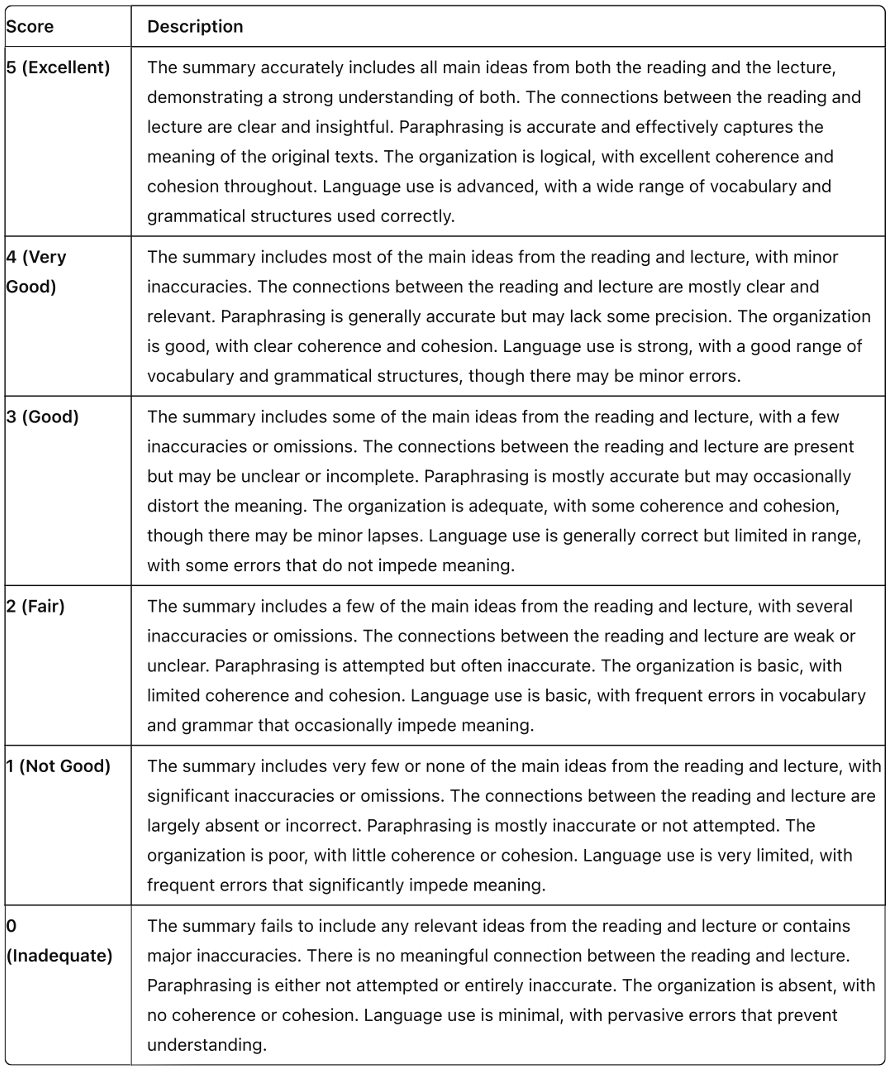

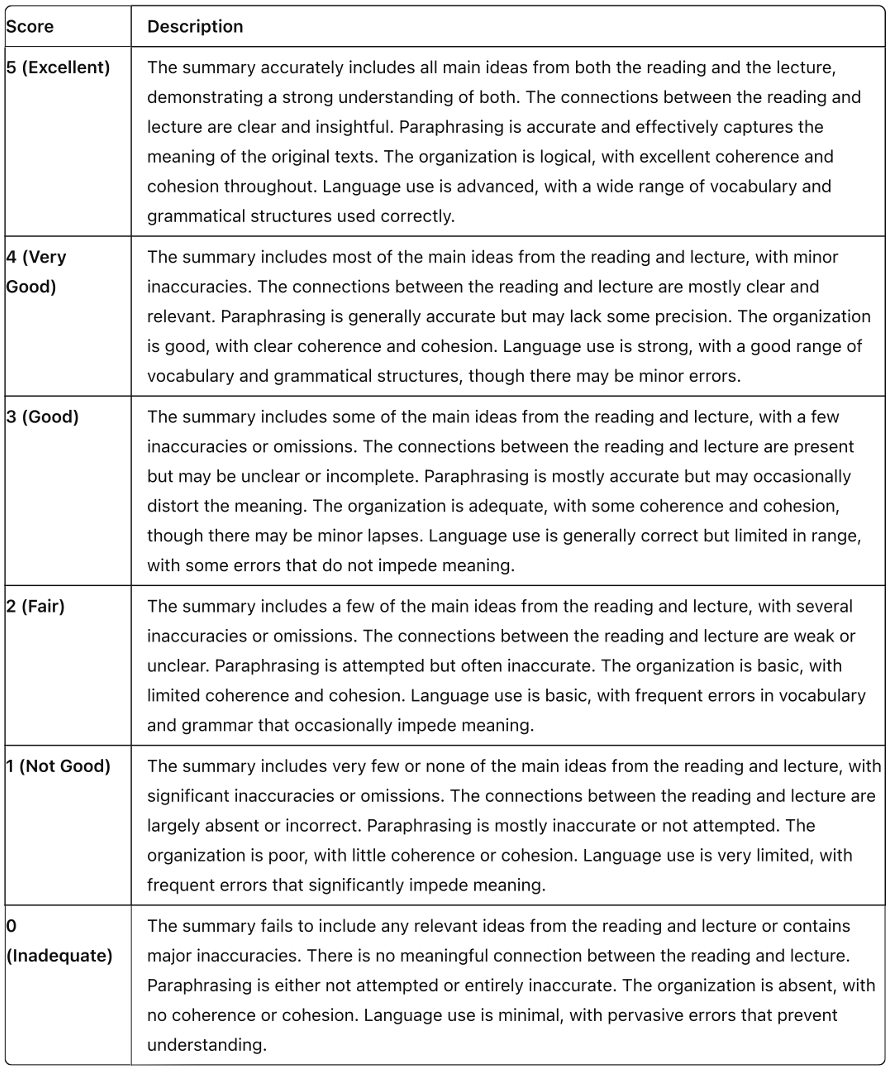

When we combined all of these prompts into a single prompt (see Pic 1), ChatGPT generated the following rubric (see Pic 2).

(Pic 1: The Holistic Prompt)

(Pic 2: Resulting Rubric)

From here, we can refine the rubric or modify the prompt as necessary. In our case, for example, we simplified the rubric descriptors by asking the AI to “streamline the rubric using bullet points”. Rather than spending time creating a rubric manually for each assignment, educators can use generative AI to produce a draft efficiently and then refine it.
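The three prompting steps above amount to assembling a handful of text components into one prompt. For educators who reuse the template across assignments, the following Python sketch shows one way to do this. The function `build_holistic_prompt` and its parameters are hypothetical, the prompt wording is copied from Steps 1 to 3, and the printed text is meant to be pasted into ChatGPT by hand (no API is called).

```python
def build_holistic_prompt(task: str, objectives: list[str],
                          scale: dict[int, str], criteria: list[str]) -> str:
    """Combine the Step 1-3 prompt components into one prompt (hypothetical helper)."""
    # Step 1: task description and course learning objectives
    step1 = (
        "Create a well-crafted and clear holistic rubric for students in the form of a "
        "table using student-friendly language. The rubric is for the following student "
        f"task description: {task}\n"
        "The rubric should contain a Scoring Scale and Descriptors, aligned with the "
        "following course objectives:\n" + "\n".join(objectives)
    )
    # Step 2: scoring scale (highest score first) and the criteria every descriptor covers
    scale_lines = "\n".join(
        f"- {points} point{'' if points == 1 else 's'}: {label}"
        for points, label in sorted(scale.items(), reverse=True)
    )
    step2 = (
        "Use the following scoring scale for the rubric:\n" + scale_lines + "\n"
        "For each scoring scale, generate a descriptor based on the course objectives I "
        "have provided. Please ensure that the descriptors focus on describing the quality "
        "of the work rather than simply the quantity.\n"
        "Include the following criteria for each element of the scoring scale mentioned "
        "above: " + ", ".join(criteria) + "."
    )
    # Step 3: output format
    step3 = (
        "Generate the rubric in the form of a table. The first row heading should include "
        "'Score' and 'Description'. The first column should display the scoring scale and "
        "points. The descriptors for each score should be listed in the corresponding row."
    )
    return "\n\n".join([step1, step2, step3])


prompt = build_holistic_prompt(
    task=("Write an integrated summary of the reading text and the lecture provided in "
          "the exam, similar to the TOEFL integrated task style."),
    objectives=[
        "D) Produce written responses to non-academic and academic tasks with "
        "level-appropriate coherence, complexity, and accuracy.",
        "E) Demonstrate awareness and understanding of level-appropriate linguistic forms.",
    ],
    scale={5: "Excellent", 4: "Very Good", 3: "Good", 2: "Fair",
           1: "Not Good", 0: "Inadequate"},
    criteria=["inclusion and accuracy of main ideas and supporting ideas",
              "the connection between the reading and the lecture",
              "paraphrasing skills", "organization, coherence and cohesion",
              "language (vocabulary and grammar)"],
)
print(prompt)  # paste the printed text into ChatGPT
```

Keeping the task, objectives, scale, and criteria as separate inputs means that only those parts change for a new assignment, while the wording aimed at the four indicators stays fixed.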

Case Study 2: Analytic Rubric for Research Reports (EAP121)

In the EAP121 course, an analytic rubric was developed to assess research reports. Analytic rubrics break the task down into separate criteria, each of which is assessed independently. This approach provides more detailed feedback and is particularly beneficial for complex tasks, as it enables students to identify specific areas for improvement. Since the language criterion is already covered by the ELC-provided Y2 master descriptor, the following demonstration focuses on the remaining three criteria: Task 1 (idea development), Task 2 (source use and application), and Organization.

Step 1: Define the Task and Learning Objectives

Before generating a rubric, it is essential to clearly define the student task and the corresponding learning objectives. This foundational information ensures that the AI can create a rubric fully aligned with the course’s goals and assessment criteria.

- AI Prompt:

"Create a clear analytic rubric for students in the form of a table. The rubric is for the following task: [Paste in the task description]."

For the EAP121 example, we might frame the task as follows: “Each student is required to submit an individual final report, which will be formally assessed.

Students must use the report format as set out in the Report Writing Guide, i.e., Abstract, Introduction, Method, Results, Discussion, and Conclusion.

The research aims and related hypotheses must be clearly stated.

Sources: You must include at least 6 sources; your sources must be English-language and generally appropriate for academic work (e.g., academic journal articles, reputable news/magazine articles, and academic lectures/videos).

The report must include 2–4 self-produced graphs in the Results section representing the data collected.

The word count is 1,300 words (+/- 10%). The title, abstract, reference list and appendix are NOT included in the count.”

- AI Prompt:

"The rubric should contain a Scoring Scale and Descriptors, aligned with the following course objectives: [Paste in the course learning objectives]."

For the EAP121 example, we can say “Demonstrate a range of academic reading skills, including the ability to locate relevant source material and select appropriate information to complete academic tasks.

Demonstrate a range of academic research skills, including selecting and refining research questions, conducting small-scale research, and presenting and analysing this research.

Demonstrate a range of academic writing skills, including following structural outlines for academic tasks (e.g. a research report), using academic language, and applying academic integrity requirements.”

Step 2: Generate the Scoring Scale and Criteria

The next step involves generating the scoring scale and defining the criteria that will be used to assess the task. The AI prompt should specify the number of performance levels and identify any specific aspects of the task that need to be evaluated.

- AI Prompt:

"Use the following scoring scale for the rubric: [Paste in the scoring scale]. Include the following criteria for each element of the scoring scale mentioned above: [Paste in the desired criteria/components]. For each of the criteria and each scoring scale, generate a descriptor based on the objectives provided."

For the EAP121 example, we can say “[0, 5, 10, 15, 20, and 25]

Include the following criteria (3 in total) for each element of the scoring scale I just mentioned above: [Task 1, Task 2, and Organization]”

Step 3: Develop Descriptors for Each Performance Level

Descriptors for each performance level should emphasize the quality of work rather than merely the quantity. This approach ensures that the rubric provides meaningful feedback, enabling students to make informed improvements in their performance.

- AI Prompt:

"For each of the criteria and each scoring scale, generate a descriptor that highlights the depth of understanding, clarity of communication, adherence to conventions, and accuracy of information, among other qualitative aspects."

For the EAP121 example, we can say “For Task 1, please focus specifically on the following elements related to idea development:

- Research aims

- Hypotheses

- Number of sources used

- Graphs included

An example of the description for a score of 10 for Task 1 is provided below:

- Ideas/information/data are adequately developed, but there may be a lack of clarity.

- Research aim/s and related hypotheses are stated.

- Six sources are used to provide ideas; at least 3 of them are of a reasonable academic quality.

- The report includes 2–4 graphs.

For Task 2, please focus specifically on the following elements:

- Source use

- Paraphrasing accuracy

- Reporting techniques

- Use of reporting verbs

An example of the description for a score of 10 for Task 2 is provided below:

- Source evidence is clearly convincing about half the time. Some paraphrases are accurate.

- No fake or misattributed sources are used.

- Source integration techniques are repetitive.

For the organization of the report, please focus on the following elements:

- Overall organization of the report

- Cohesion between and within each section

- Use of cohesive devices

An example of the description for a score of 10 for Organization is provided below:

- The report includes all the main sections suggested.

- There is cohesion connecting appropriate report sections the vast majority of the time.

- Uses a range of cohesive features with some over-, under-, or misuse, but errors do not reduce clarity.”
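As with the holistic rubric, the EAP121 prompts for Steps 1 to 3 can be assembled into a single prompt. The Python sketch below is one possible way to do so, assuming a hypothetical dictionary layout in which each criterion lists its focus elements and its example descriptors for a score of 10 (wording taken, lightly edited, from the prompts above). The function name `build_analytic_prompt` is also hypothetical. One small benefit is that the "(… in total)" count of criteria is computed rather than typed by hand.

```python
SCALE = [0, 5, 10, 15, 20, 25]

# Criterion -> focus elements and an example descriptor for a score of 10.
# The wording follows the EAP121 prompts; the dictionary layout is a hypothetical choice.
CRITERIA = {
    "Task 1": {
        "focus": ["Research aims", "Hypotheses", "Number of sources used", "Graphs included"],
        "example_10": [
            "Ideas/information/data are adequately developed, but there may be a lack of clarity.",
            "Research aim/s and related hypotheses are stated.",
            "Six sources are used to provide ideas; at least 3 of them are of a reasonable "
            "academic quality.",
            "The report includes 2-4 graphs.",
        ],
    },
    "Task 2": {
        "focus": ["Source use", "Paraphrasing accuracy", "Reporting techniques",
                  "Use of reporting verbs"],
        "example_10": [
            "Source evidence is clearly convincing about half the time. Some paraphrases are accurate.",
            "No fake or misattributed sources are used.",
            "Source integration techniques are repetitive.",
        ],
    },
    "Organization": {
        "focus": ["Overall organization of the report",
                  "Cohesion between and within each section",
                  "Use of cohesive devices"],
        "example_10": [
            "The report includes all the main sections suggested.",
            "There is cohesion connecting appropriate report sections the vast majority of the time.",
            "Uses a range of cohesive features with some over-, under-, or misuse, "
            "but errors do not reduce clarity.",
        ],
    },
}

DESCRIPTOR_INSTRUCTION = (
    "For each of the criteria and each scoring scale, generate a descriptor that highlights "
    "the depth of understanding, clarity of communication, adherence to conventions, and "
    "accuracy of information, among other qualitative aspects."
)


def build_analytic_prompt(task: str, objectives: list[str], scale: list[int],
                          criteria: dict[str, dict[str, list[str]]]) -> str:
    """Combine the Step 1-3 components of the analytic rubric prompt (hypothetical helper)."""
    lines = [
        # Step 1: task and course objectives
        "Create a clear analytic rubric for students in the form of a table. "
        f"The rubric is for the following task: {task}",
        "The rubric should contain a Scoring Scale and Descriptors, aligned with the "
        "following course objectives:",
        *objectives,
        # Step 2: scale and criteria; the criterion count is derived from the dictionary
        "Use the following scoring scale for the rubric: " + ", ".join(map(str, scale)) + ".",
        f"Include the following criteria ({len(criteria)} in total) for each element of "
        "the scoring scale mentioned above: " + ", ".join(criteria) + ".",
        # Step 3: descriptor guidance, then focus elements and one example per criterion
        DESCRIPTOR_INSTRUCTION,
    ]
    for name, spec in criteria.items():
        lines.append(f"For {name}, please focus specifically on the following elements:")
        lines.extend(f"- {element}" for element in spec["focus"])
        lines.append(f"An example of the description for a score of 10 for {name} "
                     "is provided below:")
        lines.extend(f"- {text}" for text in spec["example_10"])
    return "\n".join(lines)


prompt = build_analytic_prompt(
    task=("Each student is required to submit an individual final report, which will be "
          "formally assessed. Students must use the report format as set out in the "
          "Report Writing Guide."),
    objectives=[
        "Demonstrate a range of academic research skills, including selecting and refining "
        "research questions, conducting small-scale research, and presenting and analysing "
        "this research.",
    ],
    scale=SCALE,
    criteria=CRITERIA,
)
print(prompt)  # paste the printed text into ChatGPT
```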

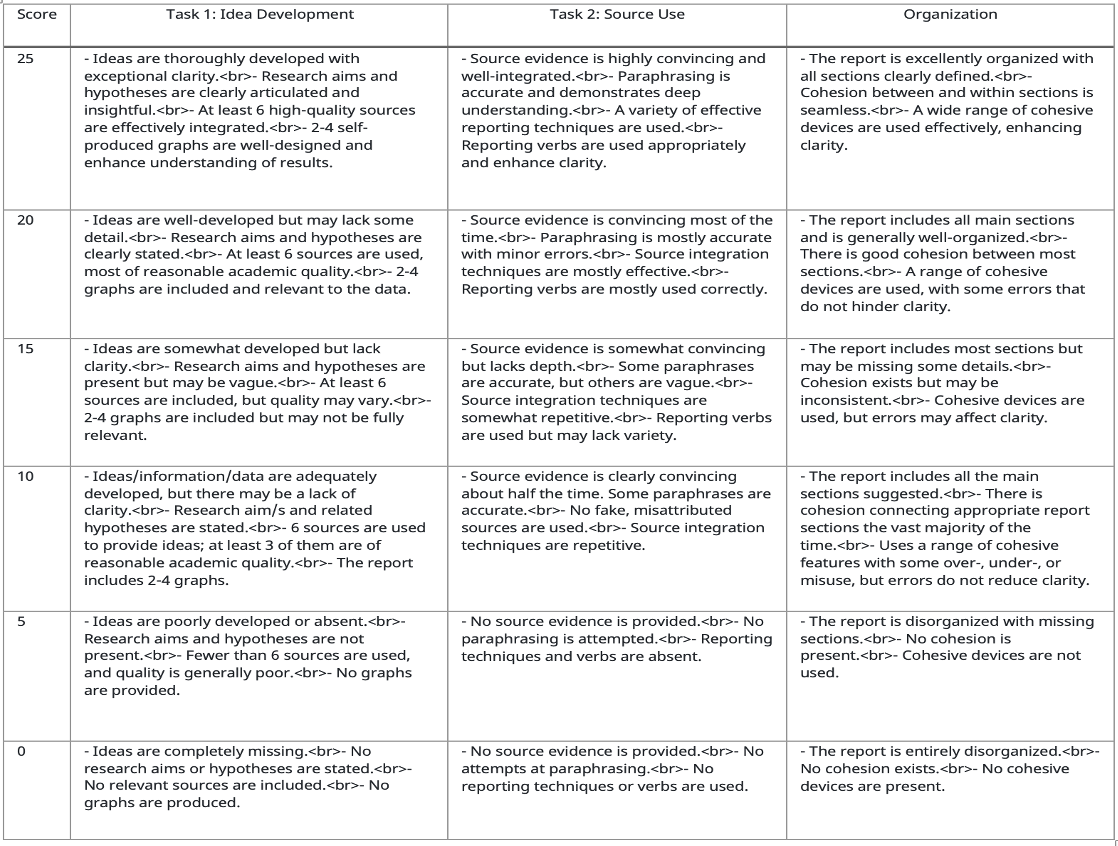

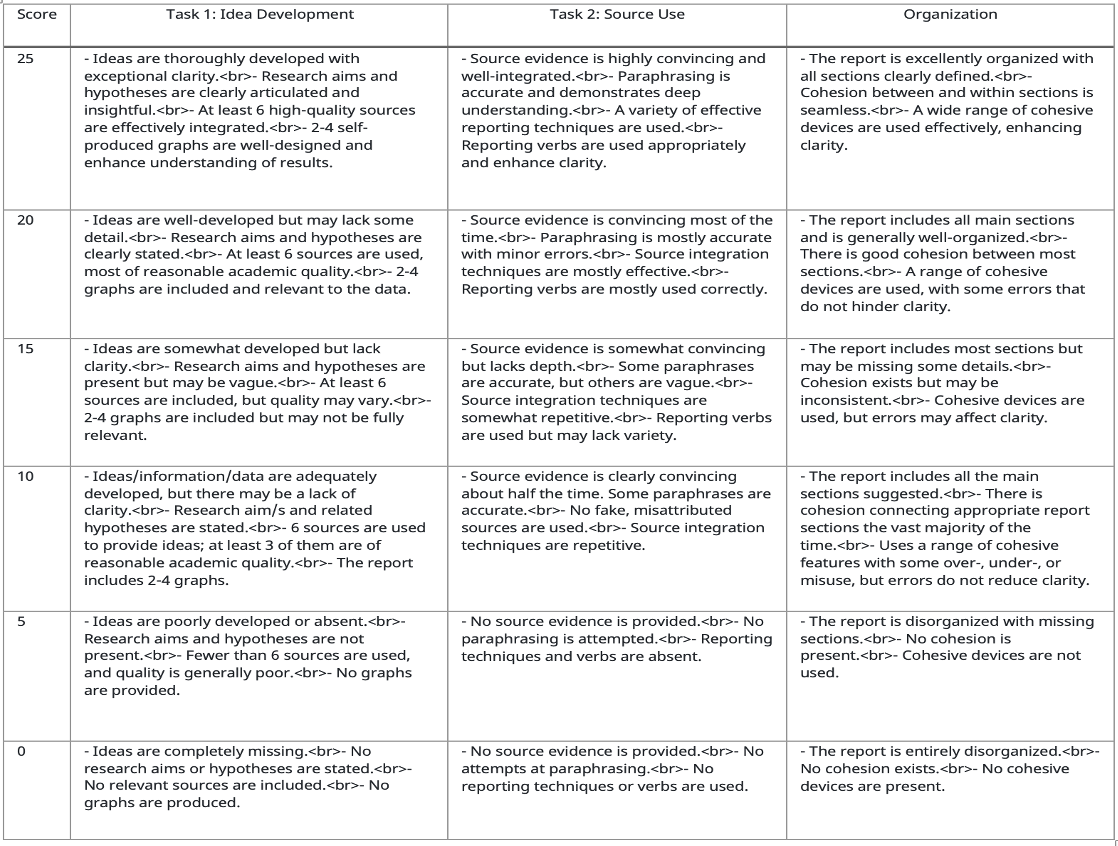

When we combined all of these prompts into a single prompt, ChatGPT generated the following rubric (see Pic 3).

(Pic 3: Resulting Rubric)

At this stage, we can adjust either the rubric itself or the prompt. The AI-generated descriptors are not intended to be "plug and play": educators should modify the language to ensure it accurately reflects the specific standards and expectations of the course.
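Before reviewing the AI-generated wording in detail, a quick mechanical check can catch obvious problems in an analytic grid. The Python sketch below assumes the generated rubric has been copied into a dictionary that maps each criterion to `{score: descriptor}`. The function names and the placeholder descriptors are hypothetical. The sketch flags missing or blank cells and adjacent score levels that share identical wording, which would mean those levels are not distinguished. It cannot judge whether the wording suits the course; that remains the educator's task.

```python
SCALE = [0, 5, 10, 15, 20, 25]


def find_gaps(rubric: dict[str, dict[int, str]], scale: list[int]) -> list[tuple[str, int]]:
    """Return (criterion, score) pairs whose descriptor is missing or blank."""
    return [(criterion, score)
            for criterion, descriptors in rubric.items()
            for score in scale
            if not descriptors.get(score, "").strip()]


def find_repeated_levels(rubric: dict[str, dict[int, str]],
                         scale: list[int]) -> list[tuple[str, int, int]]:
    """Return (criterion, lower, upper) where two adjacent score levels share identical wording."""
    repeats = []
    for criterion, descriptors in rubric.items():
        for lower, upper in zip(scale, scale[1:]):
            text = descriptors.get(lower, "").strip()
            if text and text == descriptors.get(upper, "").strip():
                repeats.append((criterion, lower, upper))
    return repeats


# Hypothetical draft with placeholder text: Task 2 lacks a 25-point descriptor, and
# Organization repeats its 15-point wording at 20 points.
draft = {
    "Task 1": {s: f"Task 1 descriptor for {s}" for s in SCALE},
    "Task 2": {s: f"Task 2 descriptor for {s}" for s in SCALE if s != 25},
    "Organization": {**{s: f"Organization descriptor for {s}" for s in SCALE},
                     20: "Organization descriptor for 15"},
}

print(find_gaps(draft, SCALE))             # [('Task 2', 25)]
print(find_repeated_levels(draft, SCALE))  # [('Organization', 15, 20)]
```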

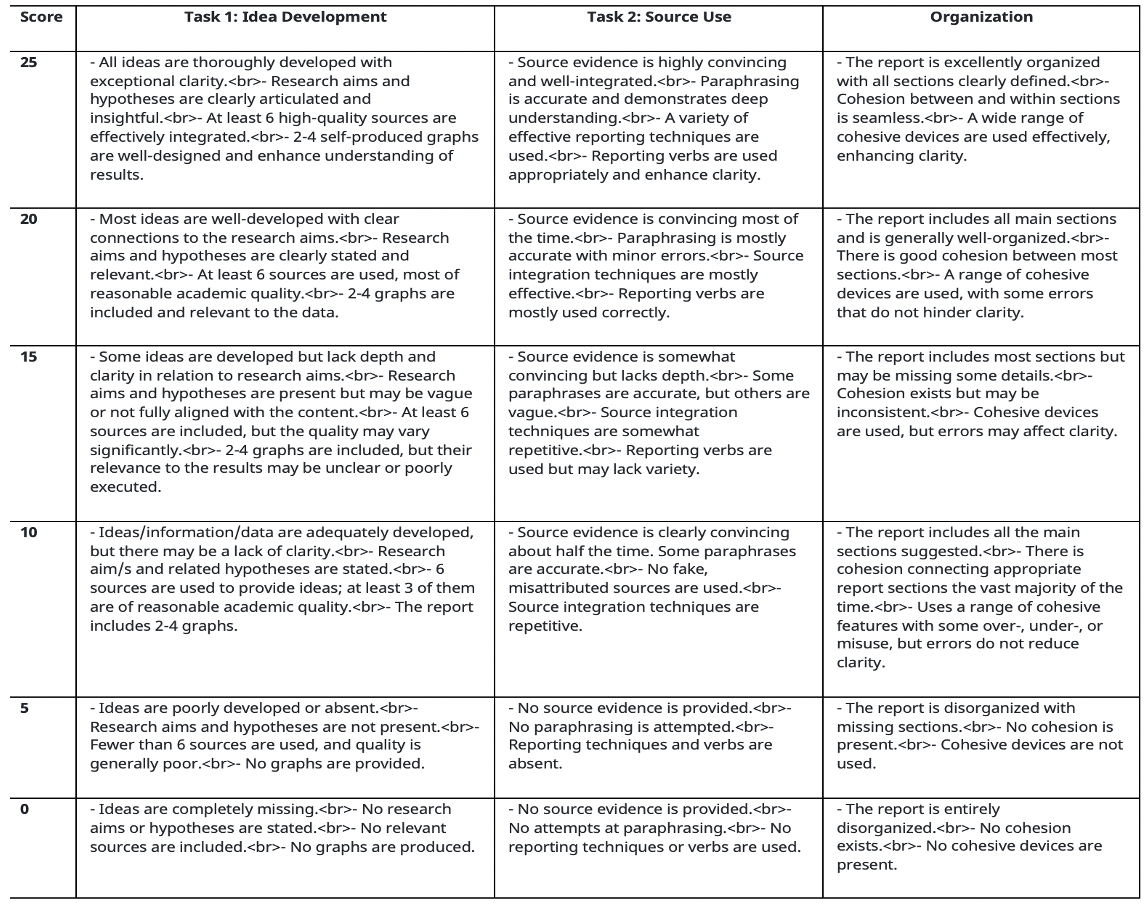

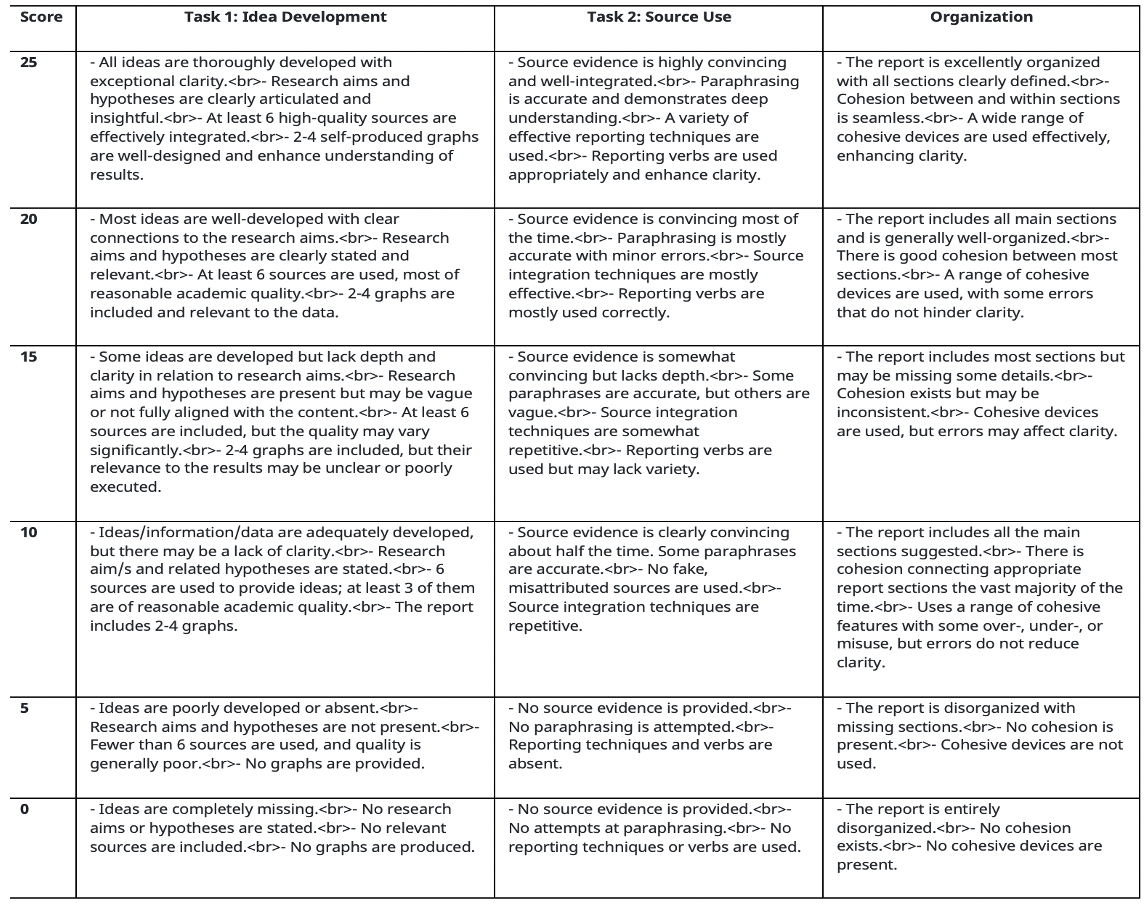

Step 4: Customize and Refine the Rubric

Once the AI generates the rubric, it is important to review and refine the descriptors to ensure their alignment with the specific task and learning objectives. The rubric should be pilot-tested with sample student work to identify any areas that need further adjustment.

For the EAP121 example, we can say “Please replace the idea descriptions in the scoring scales of 15, 20, and 25 with 'some ideas', 'most ideas', and 'all ideas'.”

“Please ensure that the description of idea development in scoring scale 15 is more challenging to achieve than that in scoring scale 10.”

Please refer to Pic 4 for an improved version.

(Pic 4: An Improved EAP121 Rubric)
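The pilot test recommended in Step 4 can include a simple reliability check: two teachers score the same sample scripts independently and compare results. The Python sketch below computes exact agreement and adjacent agreement (scores no more than one scale step apart). The function name `rater_agreement` and the scores are hypothetical; the scores are made up purely to illustrate the calculation. The step size follows the scales used in this paper: 1 for the 0 to 5 holistic scale and 5 for the 0 to 25 analytic scale.

```python
def rater_agreement(scores_a: list[int], scores_b: list[int], step: int) -> tuple[float, float]:
    """Return (exact, adjacent) agreement between two raters who scored the same scripts.

    Adjacent agreement counts score pairs that differ by at most one scale step.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("both raters must score the same, non-empty set of scripts")
    pairs = list(zip(scores_a, scores_b))
    exact = sum(a == b for a, b in pairs) / len(pairs)
    adjacent = sum(abs(a - b) <= step for a, b in pairs) / len(pairs)
    return exact, adjacent


# Made-up pilot scores for five scripts on the 0-5 holistic scale (illustration only).
teacher_1 = [5, 4, 3, 3, 2]
teacher_2 = [5, 3, 3, 1, 2]
exact, adjacent = rater_agreement(teacher_1, teacher_2, step=1)
print(f"exact: {exact:.0%}, adjacent: {adjacent:.0%}")  # exact: 60%, adjacent: 80%
```

Exact and adjacent agreement is only a rough first check; chance-corrected statistics such as Cohen's kappa are commonly used when a more formal reliability estimate is needed. Scripts on which raters disagree by more than one step are good candidates for revisiting the wording of the relevant descriptors.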

AI Prompt Analysis: Validity, Reliability, Fairness, and Efficiency

The AI prompts used to create the holistic rubric for EAP047 and the analytic rubric for EAP121 were crafted to ensure adherence to the principles of validity, reliability, fairness, and efficiency:

- Validity: The prompt explicitly incorporates course learning objectives, ensuring that the rubric aligns with the intended outcomes of the course. By linking the criteria to the specific goals of the course, the rubric accurately measures what students are expected to achieve.

- Reliability: The prompt instructs the AI to generate descriptors that focus on the quality of work rather than just quantity. This focus promotes consistency in how different evaluators might interpret and apply the rubric, thereby reducing variability in grading.

- Fairness: The prompt emphasizes student-friendly language and clear, transparent criteria. This ensures that all students understand the expectations, creating an equitable assessment environment.

- Efficiency: By generating a rubric in table form with specific descriptors for each scoring level, the AI prompt simplifies the rubric creation process, saving educators time while maintaining accuracy and detail.

When these components are effectively integrated, the AI is more likely to generate a rubric that is well aligned with the four indicators. Educators can then further refine the rubric to ensure it meets the specific needs of their students.

Conclusion

The integration of AI-powered tools into rubric design offers significant advantages, such as increased efficiency, enhanced consistency, and improved alignment with learning objectives. However, the effective implementation of AI-generated rubrics requires educators to customize them carefully and evaluate them continuously to ensure they address the specific needs of both students and educators. It is also important to compare an AI-generated rubric with established rubrics to ensure alignment with proven standards and practices. Once the rubric has been finalized, educators should gather feedback from students and colleagues to assess its effectiveness and make any necessary adjustments.

References

Allen, D., & Tanner, K. (2006). Rubrics: Tools for making learning goals and evaluation criteria explicit for both teachers and learners. CBE – Life Sciences Education, 5(3), 197–203.

Barney, S., Khurum, M., Petersen, K., Unterkalmsteiner, M., & Jabangwe, R. (2012). Improving students with rubric-based self-assessment and oral feedback. IEEE Transactions on Education, 55(3), 319–325.

Campbell, A. (2005). Application of ICT and rubrics to the assessment process where professional judgement is involved: the features of an e‐marking tool. Assessment & Evaluation in Higher Education, 30(5), 529-537.

Chen, H. J., She, J. L., Chou, C. C., Tsai, Y. M., & Chiu, M. H. (2013). Development and application of a scoring rubric for evaluating students’ experimental skills in organic chemistry: An instructional guide for teaching assistants. Journal of Chemical Education, 90(10), 1296–1302.

Felder, R. M., & Brent, R. (2016). Teaching and learning STEM: A practical guide. San Francisco: Jossey-Bass.

Jonsson, A. (2014). Rubrics as a way of providing transparency in assessment. Assessment & Evaluation in Higher Education, 39(7), 840-852.

Menéndez-Varela, J. L., & Gregori-Giralt, E. (2018). Rubrics for developing students’ professional judgement: A study of sustainable assessment in arts education. Studies in Educational Evaluation, 58, 70-79.

Nolen, A. (2024). AI-Powered Rubrics. Presented at the Georgia Tech Symposium for Lifetime Learning, Georgia Institute of Technology, Atlanta, GA.

Panadero, E., & Romero, M. (2014). To rubric or not to rubric? The effects of self-assessment on self-regulation, performance and self-efficacy. Assessment in Education: Principles, Policy & Practice, 21(2), 133-148.

Reddy, Y. M., & Andrade, H. (2010). A review of rubric use in higher education. Assessment & Evaluation in Higher Education, 35(4), 435–448.